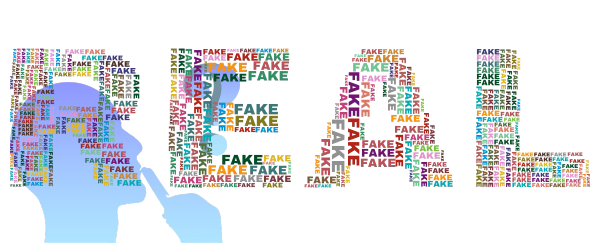

To paraphrase a famous quote often attributed to Mark Twain: a fake video can make it halfway around the world before social media even has time to identify it, let alone take it down.

Another dimension to deepfakes: voice clones. A trope in crime and spy thrillers, they now put voice artists’ jobs at risk. They also feature in political misinformation campaigns, with economic consequences.

With voice cloning software readily available online, does hearing = believing on social media? Or is this just more FUD in the zone?

• Washington Post > “AI voice clones mimic politicians and celebrities, reshaping reality” by Pranshu Verma and Will Oremus (October 15, 2023) – Experts have long predicted generative artificial intelligence would lead to a tsunami of faked photos and video. What’s emerging is an audio crisis.

Rapid advances in artificial intelligence have made it easy to generate believable audio, allowing anyone from foreign actors to music fans to copy somebody’s voice — leading to a flood of faked content on the web, sowing discord, confusion and anger.

On Thursday, a bipartisan group of senators announced a draft bill, called the No Fakes Act, that would penalize people for producing or distributing an AI-generated replica of someone in an audiovisual or voice recording without their consent.